Case Study 2

The App

This case study is about a deep research agent that creates a report based on a user query. It works in three steps:

- Create N (configurable) questions to ask.

- Create X (configurable) search queries for each question.

- Use a SYNTHESISER agent to create the final report.
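The three steps above can be sketched roughly as follows. This is a minimal illustration and not the project's actual coordinator: the names `ResearchConfig`, `ResearchTrace`, `run_research` and the three `fake_*` functions are all hypothetical, the LLM calls are replaced by stubs, and the step that actually runs the searches is left out.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ResearchConfig:
    num_questions: int = 3          # N in the text (hypothetical default)
    queries_per_question: int = 2   # X in the text (hypothetical default)


@dataclass
class ResearchTrace:
    """Keeps every intermediate output so each step can be evaluated later."""
    user_query: str
    questions: list[str] = field(default_factory=list)
    queries: dict[str, list[str]] = field(default_factory=dict)
    report: str = ""


def run_research(
    user_query: str,
    config: ResearchConfig,
    ask_questions: Callable[[str, int], list[str]],
    make_queries: Callable[[str, int], list[str]],
    synthesise: Callable[[str, dict[str, list[str]]], str],
) -> ResearchTrace:
    trace = ResearchTrace(user_query=user_query)
    # Step 1: create N questions to ask.
    trace.questions = ask_questions(user_query, config.num_questions)
    # Step 2: create X search queries for each question.
    for question in trace.questions:
        trace.queries[question] = make_queries(question, config.queries_per_question)
    # Step 3: hand everything to the synthesiser to write the report.
    trace.report = synthesise(user_query, trace.queries)
    return trace


# Stub agents standing in for real LLM calls (assumptions for illustration only).
def fake_questions(query: str, n: int) -> list[str]:
    return [f"Question {i + 1} about {query}" for i in range(n)]


def fake_queries(question: str, x: int) -> list[str]:
    return [f"{question} (search {j + 1})" for j in range(x)]


def fake_synthesiser(query: str, queries: dict[str, list[str]]) -> str:
    total = sum(len(qs) for qs in queries.values())
    return f"Report on {query} built from {total} search queries."


trace = run_research(
    "solar power", ResearchConfig(), fake_questions, fake_queries, fake_synthesiser
)
```

Returning a trace object rather than only the report is a design choice made here so that the intermediate questions and queries stay available for evaluation.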

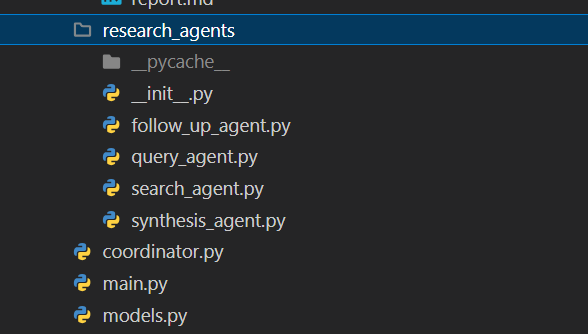

coordinator.py has access to 4 agents (follow_up_agent.py is not used).

Evals

We need to determine what our top-level evals are:

For a given question, the report should be about the question, meet the required length and answer the question. In short, it should be "a useful report". We can check length deterministically. Quality is checked first by a human, and then we build our LLM judge.
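The deterministic length check could look something like the sketch below. It assumes length is measured in whitespace-separated words and that the requirement is a min/max range. Both are assumptions, as is the function name `check_report_length`.

```python
def check_report_length(report: str, min_words: int, max_words: int) -> bool:
    """Return True if the report's word count falls inside the allowed range."""
    word_count = len(report.split())  # split on any run of whitespace
    return min_words <= word_count <= max_words
```

Because this check needs no model call, it can run on every generated report, leaving human review and the LLM judge for the quality question.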

We can have evals for the steps to make the report:

- Check N and X config values were followed.

- Evaluate the relevance of the generated questions and search queries to the user's original query.

- Check whether the article and retrieved content perform well on standard RAG evaluations (see the Evals section).
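The first of these step-level evals, checking that the N and X config values were followed, is also deterministic. A minimal sketch, assuming the questions are available as a list and the queries as a mapping from question to list of queries (the function name and data shapes are hypothetical):

```python
def check_config_followed(
    questions: list[str],
    queries: dict[str, list[str]],
    n: int,
    x: int,
) -> list[str]:
    """Return a list of human-readable problems; an empty list means a pass."""
    problems = []
    if len(questions) != n:
        problems.append(f"expected {n} questions, got {len(questions)}")
    for question in questions:
        got = len(queries.get(question, []))
        if got != x:
            problems.append(f"expected {x} queries for {question!r}, got {got}")
    return problems
```

Returning the list of problems rather than a single boolean makes a failed run easier to debug.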

Observability

Again, we identify the points where the LLM is called and export the necessary data to CSV.
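One way to do the CSV export is sketched below using Python's standard `csv` module. The column names (`step`, `prompt`, `response`) and the function name `export_llm_calls` are assumptions for illustration. The real set of fields depends on what each eval needs.

```python
import csv
import io
from typing import Iterable, TextIO

# Hypothetical columns: which step made the call, what was sent, what came back.
FIELDS = ["step", "prompt", "response"]


def export_llm_calls(records: Iterable[dict], out: TextIO) -> None:
    """Write one CSV row per recorded LLM call, ignoring any extra keys."""
    writer = csv.DictWriter(out, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(records)


# Example: write to an in-memory buffer; a real run would pass an open file.
buffer = io.StringIO()
export_llm_calls(
    [
        {"step": "questions", "prompt": "What is X?", "response": "Q1", "latency_ms": 120},
        {"step": "synthesiser", "prompt": "Write the report", "response": "Report text"},
    ],
    buffer,
)
```

When writing to a real file, open it with `newline=""` as the `csv` module documentation recommends.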

If we use Evaluation Driven Development (EDD), we write code with the eval in mind: what do we want from the agent, and how will we know it is doing it?

The datasets are produced as part of the running code. This helps developers debug and test, and allows QA to test and evaluate, before we move on to monitoring in production.